Bots on X worse than ever according to analysis of 1m tweets during first Republican primary debate

Bot activity on the platform formerly known as Twitter is worse than ever, according to researchers, despite X’s new owner, Elon Musk, claiming a crackdown on bots as one of his key reasons for buying the company.

“It is clear that X is not doing enough to moderate content and has no clear strategy for dealing with political disinformation,” associate professor Dr Timothy Graham tells Guardian Australia.

A researcher at the Queensland University of Technology, Graham has tracked misinformation and bot activity on social media for several years, including since Musk took over Twitter in October last year.

Graham and the PhD candidate Kate FitzGerald recently analysed 1m tweets surrounding the first Republican primary debate, along with Tucker Carlson’s interview with the former US president Donald Trump streamed on X at the same time.

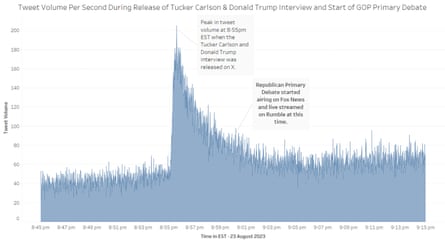

Post volume on X during the first Republican primary debate and Tucker Carlson’s Donald Trump interview. Photograph: Timothy Graham and Kate FitzGerald, QUT

Previously, the researchers were able to analyse 10m tweets a month free of charge, but after Musk restricted access to the company’s application programming interface (API), they had to fork out over US$5,000 (A$7,800) from a grant fund to gain access to the 1m posts included in the current study.

“That purchase did not feel good,” Graham says.

The analysis was conducted with the aid of a newly developed tool called Alexandria Digital, which was created to monitor and identify the spread of misinformation and disinformation.

Graham and FitzGerald identified more than 1,200 X accounts that were spreading the false and disproven claim that Trump won the 2020 election during the debate and interview, as well as a sprawling bot network of 1,305 accounts. Conspiratorial content spread during the debate attracted more than 3m views.

They analysed tweets using 11 hashtags and keywords and used those tweets to detect potential bot activity.

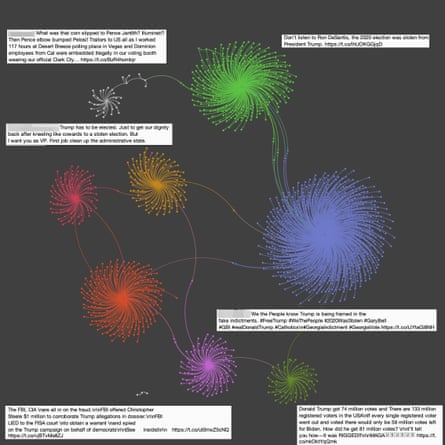

A visualisation of the annotated co-post network. For accounts to appear in this network they must have posted within 60 seconds of each other at least five times during the collection window. Photograph: Timothy Graham and Kate FitzGerald, QUT

So, how did they determine the difference between a bot and what might just be a hyperpartisan account with a human behind it? Graham says they set a high bar.

“We look at patterns of accounts that are discussing the debate topics, and during the interview as well, that are posting the same or similar content or the same links repeatedly within 60 seconds of each other,” he says.

If two accounts did this five times, the researchers took this as a sign that they were automated accounts.
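The co-posting rule described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the researchers' code or the Alexandria Digital tool: the function name `find_coposting_pairs`, the tuple layout of `posts`, and the use of exact text matching (rather than "same or similar" content) are all assumptions. The 60-second window and the threshold of five come from the figure caption.

```python
from collections import Counter, defaultdict


def find_coposting_pairs(posts, window_seconds=60, min_coposts=5):
    """Return account pairs that repeatedly post identical content close together.

    posts: iterable of (account, timestamp_in_seconds, content) tuples.
    Hypothetical input layout; exact content matching is an assumption.
    """
    # Group posts by their content (e.g. the same text or the same link).
    by_content = defaultdict(list)
    for account, ts, content in posts:
        by_content[content].append((ts, account))

    pair_counts = Counter()
    for items in by_content.values():
        items.sort()  # chronological order
        pairs_for_this_content = set()
        for i, (ts_i, acc_i) in enumerate(items):
            for ts_j, acc_j in items[i + 1:]:
                if ts_j - ts_i > window_seconds:
                    break  # later posts are even further away in time
                if acc_i != acc_j:
                    pairs_for_this_content.add(tuple(sorted((acc_i, acc_j))))
        # Count each pair at most once per piece of content.
        pair_counts.update(pairs_for_this_content)

    # Keep only pairs that co-posted at least min_coposts times.
    return {pair: n for pair, n in pair_counts.items() if n >= min_coposts}
```

Counting a pair only once per piece of content is a design choice made here so that a single burst of repeats does not reach the threshold on its own; a real analysis would also need some form of fuzzy matching to catch near-identical posts.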

One network of bots discovered was connected to an account calling itself “MediaOpinion19”. The account was created in September last year, and had tweeted on average 662 times a day – or once every two minutes. It was the central node in a network of pro-Trump accounts that retweeted the central account’s tweets.

The researchers also found a second pro-Trump cluster of bot accounts linked to a fake news website that posts content similar to the Russian IRA “news trolls” identified during the 2016 presidential election.

FitzGerald says the accounts remained active long after they had first discovered them, showing that X has not been cracking down on bot activity, let alone the misinformation and disinformation posted by real people.

“In terms of the bots that we identify, Twitter or X is not banning them. We’ve only found one or two that’s actually getting suspended, but others [remain] in the same network,” she says.

“And so whatever method they’re using is poor.”

She says many of the human-backed hyperpartisan clusters promoting misinformation identified in the report were verified by the platform under Musk’s new system, which allows people to pay for the blue-tick verification on X.

Around the time of Musk’s first attempt to buy Twitter, he posted: “If our Twitter bid succeeds, we will defeat the spam bots or die trying!”

Musk reportedly claimed in June that the company “had eliminated at least 90% of scams”.

But scam accounts are still prominent on the platform, with several verified accounts seen as late as Thursday this week promoting long-known investment scams.

Graham and FitzGerald are now turning their attention to potential misinformation and bot activity related to the referendum on an Indigenous voice to parliament, but Graham says it is too early to share any findings.

Despite the turmoil at X, its drop in value and the rise of rivals such as Threads and Bluesky, Graham says Twitter is still the “engine room of social media”.

“Everything goes in and out of Twitter because journalists and elites in the public sphere think that it matters … They then go and report on it,” he says.

“Twitter is still hanging on to its status as the centre in terms of how everything fits together. I think that it’s going to still be influential.”

FitzGerald says X’s influence on politics will continue, even though progressives might be shifting to other platforms.

“The people that continue to use Twitter are the people that are going to be the most influential in undermining democracy in the US 2024 election,” she says.

“In terms of its impact on democracy, I still feel that it is maybe even more [influential] than it used to be because it’s less balanced than it used to be.”

In response to a claim on X this week that “Democrat groups buy bots” to smear people’s reputation, Musk replied: “It is impossible to tell with unverified accounts whether you’re dealing with a small or large number of real people, as sophisticated bots are virtually indistinguishable from humans.”

X was contacted for comment.